Vision Transformers

Universal Interface

Artificial Intelligence

Universal Vision Transformer

GiT: A Generalist Vision Transformer Approach

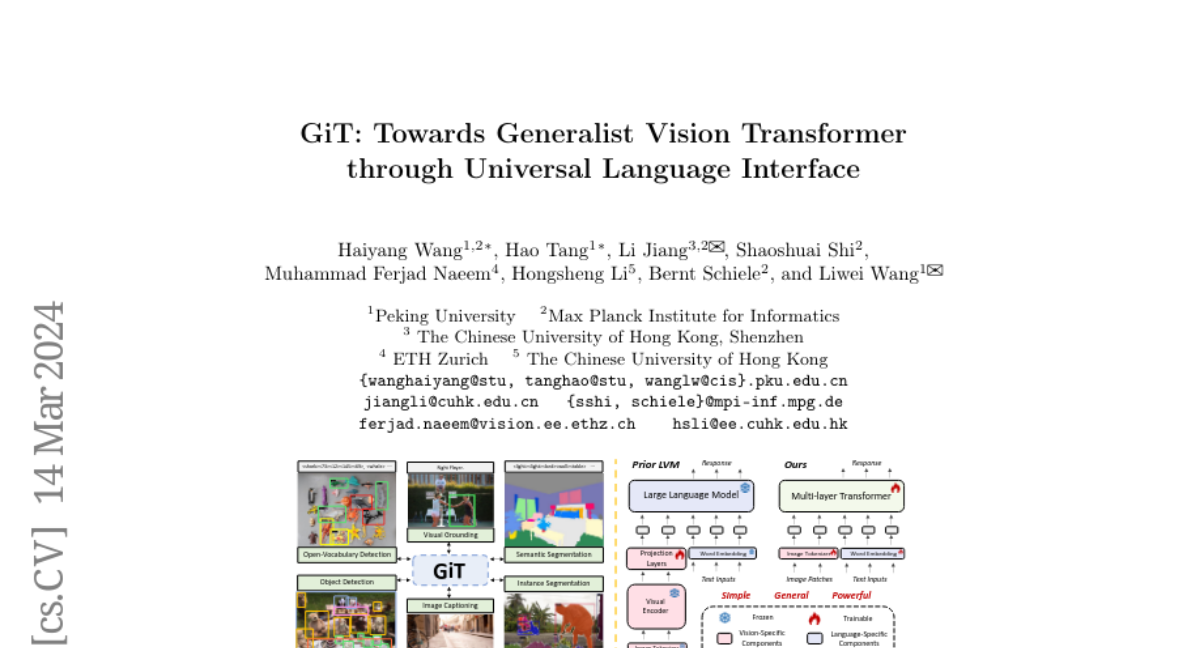

Haiyang Wang, Hao Tang, Li Jiang, and their team propose GiT, a Vision Transformer framework that unifies various visual tasks through a universal language interface. By removing the need for task-specific modules, GiT paves the way for a powerful, simplified visual foundation model applicable across multiple benchmarks.

- GiT’s innovative universal language interface enables a single model to perform a wide variety of visual tasks.

- Employs a generalist approach, being trained on five key datasets without task-specific modifications.

- Showcases mutual enhancement and robust zero-shot performance across tasks when enriched with diverse datasets.

The GiT model stands as a testimony to the potential of universality in AI architectures, demonstrating the possibility of a cross-disciplinary approach between vision and language tasks. It could serve as a pivotal foundation for future AI systems that require less specialization while offering broad capabilities.

Personalized AI news from scientific papers.