Vision Transformers

Universal Language Interface

Multi-task Models

GiT: Generalist Vision Transformer

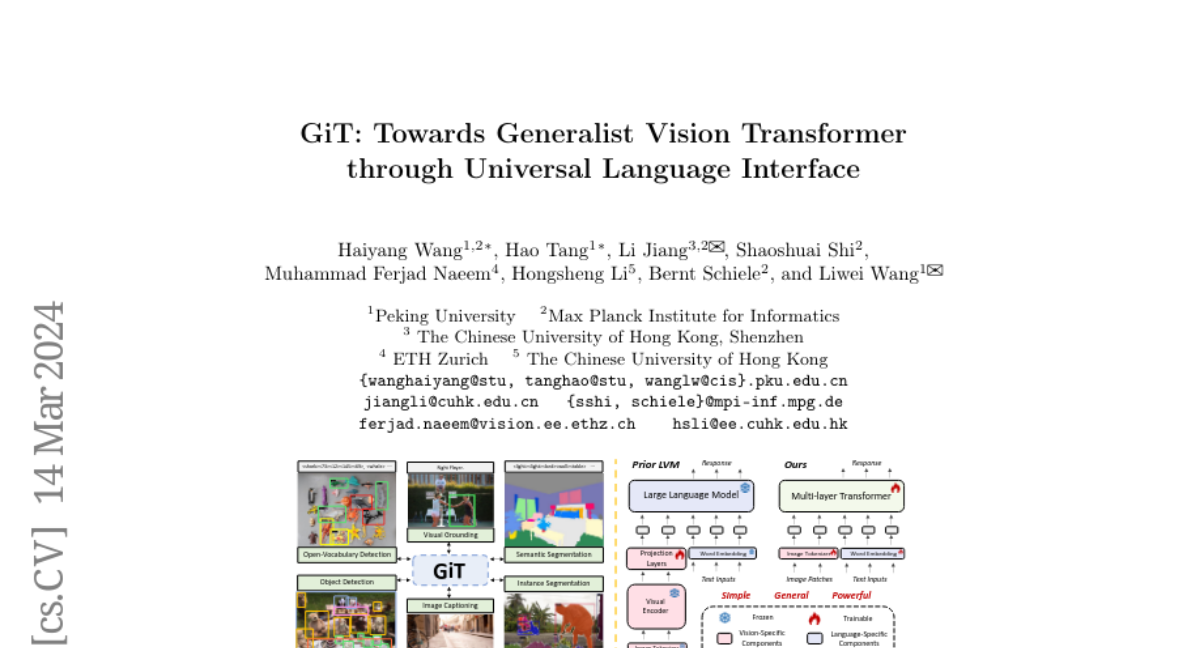

The paper, GiT: Towards Generalist Vision Transformer through Universal Language Interface, brings forth a framework that extends the versatility of the Vision Transformer (ViT) for multiple vision tasks.

- GiT pioneers the use of auto-regressive decoding to unify tasks such as captioning, detection, and segmentation.

- A single ViT model, free of task-specific modules, is trained jointly on five benchmarks leading to mutual enhancements and improvements.

- The model, when trained on an extended set of 27 datasets, demonstrates strong zero-shot performance across various tasks.

- GiT aligns with the effectiveness of large language models (LLMs) and presents a scalable architecture bridging the gap between vision and language tasks.

The generalist approach of GiT simplifies training and deployment processes and opens pathways for universal models capable of addressing a myriad of vision-related tasks, marking a significant stride towards an integrated visual-language processing in AI.

Personalized AI news from scientific papers.